All About Video Analytics & AI-Powered Analysis for CCTV & IP Security Cameras

By Justin C., Video Security System Specialist — A2Z Security Cameras

Last updated: August 18, 2025

TL;DR (buyer’s digest)

- Video analytics evolved from pixel motion → rules-based (tripwire, intrusion) → AI that recognizes people, vehicles, plates, PPE, and behaviors.

- You can run analytics on the camera (edge), on an NVR/VMS, on a server/cluster, or in the cloud—each trades latency, bandwidth, and scale differently.

- Edge AI gives fast, bandwidth-lean alerts; pairing with NVR/VMS unlocks cross-camera search, dashboards, and workflows.

- ONVIF Profile M standardizes the metadata format/transport, not full feature parity. Test what is published → ingested → searchable in your stack.

Looking specifically for in-camera analytics? See the companion deep-dive: AI-Powered Analytics in IP Cameras — Focused Guide to Edge AI.

What do we mean by “video analytics,” “AI,” and “video analysis”?

- Video analytics: Automated detection, classification, counting, and measurement over CCTV/IP streams (e.g., person in Zone A, vehicle line-crossing, LPR/ALPR read).

- AI-powered analytics: Deep-learning models (running on camera NPUs or servers/software) that understand what is in the scene and sometimes what’s happening.

- Video analysis: The broader practice, including manual review, evidence export, reports, and dashboards fed by analytics metadata.

Typical outputs: events, metadata (object class, attributes, zones/lines, timestamps, confidence), and bookmarks. These power alerts, search, reporting, and deterrence actions (lights/siren/voice-down).
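
To make those outputs concrete, here is a minimal sketch of what one event record and a metadata search might look like. The `AnalyticsEvent` class, its field names, and the `search` helper are illustrative assumptions, not a vendor or ONVIF schema.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json


@dataclass
class AnalyticsEvent:
    """Illustrative event record; field names are assumptions, not a vendor schema."""
    camera_id: str
    rule: str                 # e.g. "intrusion" or "line_crossing"
    object_class: str         # e.g. "person" or "vehicle"
    confidence: float         # 0.0 to 1.0
    zone: str
    timestamp: datetime
    attributes: dict = field(default_factory=dict)

    def to_json(self) -> str:
        # Serialize for an alert payload or a search index.
        record = asdict(self)
        record["timestamp"] = self.timestamp.isoformat()
        return json.dumps(record, sort_keys=True)


def search(events, object_class=None, zone=None, min_confidence=0.0):
    """Metadata-style filter: match on class, zone, and a confidence floor."""
    return [
        e for e in events
        if (object_class is None or e.object_class == object_class)
        and (zone is None or e.zone == zone)
        and e.confidence >= min_confidence
    ]


events = [
    AnalyticsEvent("cam-01", "intrusion", "person", 0.91, "Zone A",
                   datetime(2025, 8, 18, 2, 14, tzinfo=timezone.utc)),
    AnalyticsEvent("cam-02", "line_crossing", "vehicle", 0.84, "Gate",
                   datetime(2025, 8, 18, 2, 20, tzinfo=timezone.utc),
                   {"color": "white", "type": "van"}),
    AnalyticsEvent("cam-01", "intrusion", "person", 0.42, "Zone A",
                   datetime(2025, 8, 18, 3, 5, tzinfo=timezone.utc)),
]

hits = search(events, object_class="person", zone="Zone A", min_confidence=0.6)
print([h.to_json() for h in hits])
```

The confidence floor is what separates a usable alert from a nuisance one here: the second person event in Zone A is dropped because it falls below 0.6.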

From then to now: a brief history

1) Pixel-based motion detection (VMD)

- Monitors frame-to-frame pixel change.

- Pros: simple, low compute. Cons: false alerts (shadows, trees, headlights), no understanding of objects.
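
A rough sketch of the idea, assuming grayscale frames stored as plain lists of pixel rows. The function names and both thresholds are hypothetical; real cameras use tuned, often proprietary variants.

```python
def motion_ratio(prev_frame, curr_frame, pixel_threshold=25):
    """Fraction of pixels whose grayscale value changed by more than pixel_threshold."""
    changed = 0
    total = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for p, c in zip(prev_row, curr_row):
            total += 1
            if abs(p - c) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def is_motion(prev_frame, curr_frame, pixel_threshold=25, area_threshold=0.05):
    """Flag motion when enough of the frame changed; no notion of what moved."""
    return motion_ratio(prev_frame, curr_frame, pixel_threshold) >= area_threshold


# 8x8 dark background, then the same scene with a bright 3x3 object in it.
background = [[10] * 8 for _ in range(8)]
with_object = [row[:] for row in background]
for y in range(2, 5):
    for x in range(2, 5):
        with_object[y][x] = 200

# A headlight sweep brightens every pixel, with no object present at all.
headlight_sweep = [[v + 60 for v in row] for row in background]

print(is_motion(background, with_object))      # object enters the scene
print(is_motion(background, headlight_sweep))  # lighting change only
```

Both comparisons trip the detector, which is the weakness noted above: a lighting change looks the same as a real object when only pixel differences are counted.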

2) Rules-based “smart” analytics

- Tripwire/intrusion, object left/removed, tamper, basic people counting, dwell/loiter—built on classical computer vision.

- Better than raw motion, but still scene-sensitive (lighting, textures, crowding).

3) AI (deep learning) era

- Models detect people/vehicles, read license plates, and recognize attributes (vehicle type/color, PPE) with higher robustness and fewer nuisance alerts when scenes are designed carefully.

4) Edge AI with NPUs/NNA

- Cameras gain dedicated NPUs to run models on-device. Benefits: sub-second alerts, event-driven recording, lower backhaul, resilience through SD/NAS buffering with ANR backfill, and a lighter processing load on the recorder or server.

5) Interoperability push

- ONVIF Profile M defines how analytics metadata is structured and transported between cameras and recorders/VMS. It greatly improves interoperability but does not force support for every attribute in every UI.

What’s next (practical horizon):

- More model concurrency on-camera, better cross-camera correlation, privacy-aware re-identification, sensor fusion (thermal/radar), and easier governance with auditable policies.

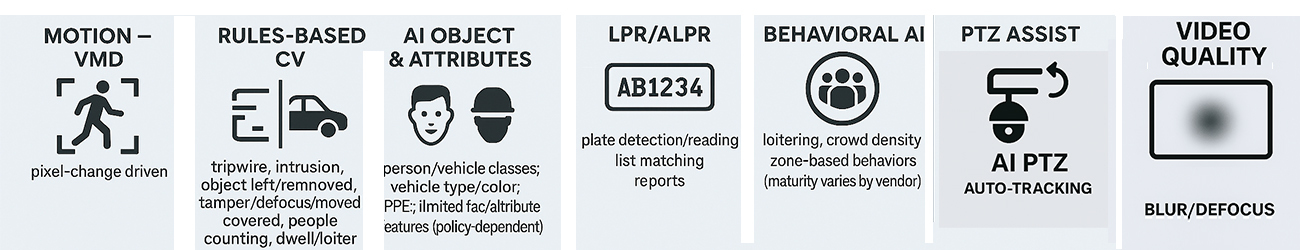

Taxonomy: the analytics you’ll actually see

- Motion / VMD: pixel-change driven.

- Rules-based CV: tripwire, intrusion, object left/removed, tamper/defocus/moved/covered, people counting, dwell/loiter, heatmaps.

- AI object & attributes: person/vehicle classes; vehicle type/color; PPE (helmet/vest); limited face/attribute features (policy-dependent).

- LPR/ALPR: plate detection/reading; list matching; reports.

- Behavioral AI: loitering, crowd density, zone-based behaviors (maturity varies by vendor).

- PTZ assist: AI-triggered auto-tracking or operator click-to-track; some systems also hand a target off from a fixed camera to a PTZ (features vary by vendor).

- Video quality analytics: blur/defocus, camera moved/covered for uptime/tamper alerts.

Where analytics run (and how to choose)

| Run Location | How it works | Strengths | Watch-outs | Best fit |
|---|---|---|---|---|
| Edge (in-camera) | NPU runs models locally; emits events/metadata | Lowest latency, lowest bandwidth; resilient with SD/NAS buffer | Model concurrency limits; UI depth depends on NVR/VMS | Remote/cellular, small sites, active deterrence |
| NVR / VMS (appliance/PC) | Recorder/VMS ingests streams and runs built-in analytics | Unified UI, cross-camera rules/search, centralized ops | Compute sizing; camera uplinks still needed | SMB/enterprise multi-camera review |
| Server/Cluster | Dedicated GPU servers run advanced models | Scale, specialized analytics, high accuracy | Cost/ops; bandwidth/storage planning | Large/enterprise, campuses, heavy analytics |
| Cloud/Hybrid | Cloud models take streams/clips/metadata | Anywhere access, fast feature velocity | Uplink constraints, privacy/regulatory | Distributed sites with strong uplink |

Rule of thumb: Edge for fast triggers and bandwidth savings; pair with NVR/VMS for search, dashboards, and incident timelines.

Interoperability basics: ONVIF Profiles S/T/M (plain English)

- Profile S: streaming & basic events.

- Profile T: H.265 and advanced imaging.

- Profile M: analytics metadata model/transport (objects, attributes, confidence, timestamps, zones/lines).

Reality check: Profile M standardizes the envelope, not the feature set. Confirm each attribute’s journey:

1) Published by the camera → 2) Ingested by the recorder/VMS → 3) Exposed & searchable in the UI.
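
One way to track that journey during a pilot is a simple checklist comparison. The sketch below assumes you have noted, by hand or from exports, which attributes appear at each of the three stages; the attribute names and the `attribute_journey` helper are hypothetical.

```python
def attribute_journey(published, ingested, searchable):
    """Report how far each camera-published attribute gets in the stack."""
    report = {}
    for attr in sorted(published):
        if attr not in ingested:
            stage = "published only"
        elif attr not in searchable:
            stage = "ingested, not searchable"
        else:
            stage = "searchable"
        report[attr] = stage
    return report


# Hand-collected observations from one camera + recorder pairing (made-up values).
published = {"object_class", "confidence", "vehicle_color", "vehicle_type", "ppe_helmet"}
ingested = {"object_class", "confidence", "vehicle_color", "vehicle_type"}
searchable = {"object_class", "vehicle_color"}

for attr, stage in attribute_journey(published, ingested, searchable).items():
    print(f"{attr:15} {stage}")
```

Anything that stops short of "searchable" is a feature you are paying for on the camera but cannot use from the recorder or VMS interface.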

Designing scenes for reliable analytics (field-tested)

| Factor | Why it matters | Practical guidance |
|---|---|---|
| Pixels on target | Models need subject size | People 80–100 px tall (waist-up 60–80 px workable); LPR 130–180 px plate width; angle <30° |
| Lens & framing | Detection vs detail | Prefer varifocal; avoid ultra-wide scenes that shrink targets |
| Lighting & shutter | Night blur ruins AI | Balanced IR/white light; faster shutter; suppress glare/hotspots |
| Frame rate | Motion fidelity | 15–20 fps typical; raise for traffic/LPR |
| Mount stability | Avoid false motion | Rigid mounts; damp vibration |
| Masks & zones | Fewer nuisance alerts | Mask roads/trees; lines perpendicular to motion; schedule by time |

Pilot the worst case (night/rain/headlights/backlight). Log false positives (FPs) and false negatives (FNs) before scaling.
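
Before the pilot, a quick estimate of pixels on target can rule out framing that will never meet the guidance above. This is a rough sketch assuming a simple pinhole model (no lens distortion, target near the center of the frame); the function names, the 1.7 m person height, and the 0.305 m plate width are illustrative assumptions.

```python
import math


def scene_width_m(distance_m, hfov_deg):
    """Width of the scene covered at a given distance for a horizontal field of view."""
    return 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)


def pixels_per_meter(h_res_px, distance_m, hfov_deg):
    """Horizontal pixel density at the target distance."""
    return h_res_px / scene_width_m(distance_m, hfov_deg)


def target_pixels(target_size_m, h_res_px, distance_m, hfov_deg):
    """Approximate pixels spanned by a target of the given size (square pixels assumed)."""
    return target_size_m * pixels_per_meter(h_res_px, distance_m, hfov_deg)


# 2560 px wide sensor, wide 90 degree lens, subject at 15 m.
person_px = target_pixels(1.7, 2560, 15, 90)
plate_px_wide = target_pixels(0.305, 2560, 15, 90)

# Same sensor zoomed to a 30 degree field of view, plate at 10 m.
plate_px_tele = target_pixels(0.305, 2560, 10, 30)

print(round(person_px), round(plate_px_wide), round(plate_px_tele))
```

With these assumed numbers the wide view gives a person comfortably more than 100 px of height but leaves the plate far below the 130 px guideline, while the narrower view brings the plate into range. That is the detection-versus-detail trade-off from the table, and one reason a varifocal lens is preferred.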

Event pipelines, bandwidth & storage (what actually changes)

- Event-driven / dual-stream recording: High quality around events, low bitrate otherwise → major storage savings with preserved evidence.

- Metadata-first search: Filter by object, attributes, zone/line, time, and notes across many cameras.

- ANR/backfill: Camera buffers to microSD/NAS and backfills the recorder/VMS when links return.

- Evidence workflows: Auto-bookmarks, clip kits, and export audit trails for clean hand-offs.
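
The backfill step comes down to finding which time ranges the recorder is missing so they can be pulled from the camera's local buffer. A minimal sketch of that gap calculation, assuming timestamps in seconds and a hypothetical `missing_intervals` helper (actual ANR logic is vendor-specific):

```python
def missing_intervals(window, recorded):
    """Return the parts of window (start, end) not covered by any recorded range."""
    start, end = window
    gaps = []
    cursor = start
    for seg_start, seg_end in sorted(recorded):
        if seg_end <= cursor:
            continue                      # segment lies entirely before the cursor
        if seg_start >= end:
            break                         # segment lies entirely after the window
        if seg_start > cursor:
            gaps.append((cursor, seg_start))
        cursor = max(cursor, seg_end)
    if cursor < end:
        gaps.append((cursor, end))
    return gaps


# One hour of expected footage; the link dropped between 900 s and 1500 s.
hour = (0, 3600)
on_recorder = [(0, 900), (1500, 3600)]

print(missing_intervals(hour, on_recorder))
```

Each returned range is what the camera would need to backfill from microSD or NAS once the link returns.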

Security & governance (practical guardrails)

- Harden devices: disable unused services, unique strong credentials, TLS where supported, rotate keys/certs.

- RBAC & MFA: role-based access; MFA on NVR/VMS; audit logs for exports/changes.

- Network posture: dedicated VLANs, least-privileged firewall rules, avoid direct Internet exposure.

- Privacy controls: masking for public views; retention limits; document who receives alerts and where footage goes.

- Patch policy: staged firmware rollouts and a rollback plan.

Benchmarking that sticks (acceptance template)

- Scenes: day/night, rain, backlight, busy background, headlights.

- Metrics: FP (false positive) rate, FN (false negative) rate, event-to-alert latency, missed-event counts.

- Targets (example values; tune per site): FP ≤ 2/hour in Zone A at night; LPR read accuracy ≥ 95% at the specified plate pixel width and angle; latency ≤ 800 ms (edge), ≤ 1.5 s (via NVR rule).

- Settings log: firmware, model versions, masks/lines, thresholds, fps/shutter, codec/bitrate.

- Evidence kit: 5–10 clips per scenario, settings exports, short site notes.

- Sign-off: date, reviewer, deviations, next actions.
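
The template above can be scored with a few lines of arithmetic. The sketch below is one possible scoring helper, not a standard tool: `PilotResult` and `evaluate` are hypothetical names, the defaults reuse the example FP and edge-latency targets, and judging latency on the slowest observed alert is an assumption you may want to relax to a percentile.

```python
from dataclasses import dataclass, field


@dataclass
class PilotResult:
    """One scenario from a pilot; all field names here are illustrative."""
    scenario: str
    hours_observed: float
    true_events: int          # ground-truth events, staged or hand-labelled
    missed_events: int        # false negatives
    false_positives: int
    latencies_ms: list = field(default_factory=list)


def evaluate(result, max_fp_per_hour=2.0, max_latency_ms=800.0):
    """Compare one scenario against the example FP and edge-latency targets."""
    fp_per_hour = result.false_positives / result.hours_observed
    fn_rate = (result.missed_events / result.true_events
               if result.true_events else 0.0)
    worst_latency = max(result.latencies_ms) if result.latencies_ms else None
    return {
        "scenario": result.scenario,
        "fp_per_hour": fp_per_hour,
        "fn_rate": fn_rate,
        "worst_latency_ms": worst_latency,
        "fp_ok": fp_per_hour <= max_fp_per_hour,
        # Assumption: latency is judged on the slowest observed alert.
        "latency_ok": worst_latency is not None and worst_latency <= max_latency_ms,
    }


night = PilotResult("Zone A, night, rain", hours_observed=8, true_events=40,
                    missed_events=2, false_positives=12,
                    latencies_ms=[420, 610, 790])
report = evaluate(night)
print(report)
```

Keeping the raw counts alongside the pass/fail flags makes the sign-off easier to audit later, since a reviewer can see how close each scenario came to the target.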

Edge vs NVR/VMS vs Server/Cloud—at a glance

| Capability / Ops | Edge-Only (Camera) | Hybrid (Camera + NVR/VMS) | Server-Heavy (VMS/Analytics) |
|---|---|---|---|
| Alert latency | Lowest | Low/Med | Med |
| Bandwidth use | Lowest | Low/Med | Med/High |
| Cross-camera search/rules | Limited | Advanced | Advanced |
| Admin overhead | Low | Med | High |
| Scalability (sites/cams) | Per camera | High | Highest |
| Best fit | Small/remote | Most SMB/enterprise | Large/enterprise/specialized |

Storage impact (illustrative)

| Recording Mode | Estimated Storage per Camera (GB/day) | Notes |
|---|---|---|
| Continuous Recording | 120 | Simple workflow; highest storage/backhaul |
| Event-Driven (Dual-Stream) | 45 | ~62% reduction (120 → 45 GB/day); preserves high quality around events |

Adjust to your scene tests (resolution, fps, motion).
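
To plug in your own numbers, the arithmetic is straightforward: average bitrate times seconds per day, converted to gigabytes. The sketch below assumes decimal gigabytes and uses made-up bitrates and an assumed 20% event share; the function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def gb_per_day(bitrate_mbps):
    """Average bitrate in Mbps -> decimal gigabytes per day."""
    megabits = bitrate_mbps * SECONDS_PER_DAY
    return megabits / 8 / 1000   # megabits -> megabytes -> gigabytes


def event_driven_gb_per_day(high_mbps, low_mbps, event_fraction):
    """Blend a high event bitrate and a low idle bitrate by the share of the day with events."""
    blended = event_fraction * high_mbps + (1 - event_fraction) * low_mbps
    return gb_per_day(blended)


def reduction_pct(before_gb, after_gb):
    """Percentage saved when moving from before_gb to after_gb."""
    return 100 * (before_gb - after_gb) / before_gb


continuous = gb_per_day(8.0)                          # constant 8 Mbps
event_driven = event_driven_gb_per_day(8.0, 1.0, 0.2) # 8 Mbps during events, 1 Mbps idle

print(round(continuous, 1), round(event_driven, 1))
print(reduction_pct(120, 45))   # the illustrative table figures
```

The event share is the sensitive input: a busy scene with events most of the day erodes the savings quickly, which is why the estimate should come from your own scene tests.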

Glossary (foundation)

- NPU / NNA: On-camera neural processing hardware for real-time AI.

- Analytics metadata: Structured descriptors (object class, attributes, zones, timestamps, confidence).

- ONVIF Profile S/T/M: S = streaming; T = H.265 & imaging; M = analytics metadata model/transport.

- ANR (Automatic Network Replenishment): Buffer to SD/NAS when links drop and backfill later.

- Model concurrency: How many analytics a camera can run simultaneously without degrading performance.

FAQs (foundation level)

Is pixel-based motion still useful?

Yes, for basic presence detection, but expect more false alerts. Use masks/schedules or move up to rules-based or AI analytics.

Do I need AI if I already use tripwires?

Tripwires work, but AI improves precision (e.g., only trigger on person crossing) and enables richer search.
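
As a rough illustration of the difference, the sketch below combines a geometric line-crossing test with a class and confidence filter. It assumes the camera or VMS already supplies an object class, a confidence score, and previous/current positions for each tracked object; the function names, the 0.6 confidence floor, and the coordinates are hypothetical.

```python
def _side(a, b, p):
    """Cross product sign: which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def crossed(line_a, line_b, prev_pos, curr_pos):
    """True when the move prev_pos -> curr_pos strictly crosses the tripwire segment."""
    d1 = _side(line_a, line_b, prev_pos)
    d2 = _side(line_a, line_b, curr_pos)
    d3 = _side(prev_pos, curr_pos, line_a)
    d4 = _side(prev_pos, curr_pos, line_b)
    return d1 * d2 < 0 and d3 * d4 < 0


def should_alert(object_class, confidence, line_a, line_b, prev_pos, curr_pos,
                 allowed_classes=("person",), min_confidence=0.6):
    """Classic tripwire plus an AI filter: only allowed classes above a confidence floor."""
    if object_class not in allowed_classes or confidence < min_confidence:
        return False
    return crossed(line_a, line_b, prev_pos, curr_pos)


tripwire = ((0, 5), (10, 5))   # horizontal line across the scene

print(should_alert("person", 0.9, *tripwire, (5, 3), (5, 7)))   # person walks across
print(should_alert("vehicle", 0.9, *tripwire, (5, 3), (5, 7)))  # same path, filtered class
print(should_alert("person", 0.9, *tripwire, (2, 3), (8, 3)))   # walks parallel, no crossing
```

A plain tripwire would fire on the first two cases alike; the class filter is what removes the vehicle alert.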

Will AI increase bandwidth?

Usually no. Edge AI supports event-driven workflows that often reduce backhaul compared to constant high-bitrate streaming.

Is on-camera LPR enough?

For controlled approaches and proper framing, often yes. Complex angles/speeds may need server/cloud LPR.

Does ONVIF Profile M ensure full analytics parity?

No. It standardizes metadata exchange, not which events/attributes each vendor supports or exposes. Verify your exact camera ↔ NVR/VMS combo.

Conclusion & next steps

Video analytics have matured from pixel motion to AI-powered, metadata-rich detection you can search and act on. Choose where to run analytics (edge, NVR/VMS, server, cloud) based on latency, bandwidth, scene design, and scale—and validate with a short benchmark.

- For in-camera specifics (concurrency, Profile M, benchmarking), read the companion: AI-Powered Analytics in IP Cameras — Focused Guide to Edge AI.

- Need help mapping features to your NVR/VMS and designing reliable scenes? A2Z can help evaluate options, confirm integration, and source compatible AI IP cameras and recorders.

Next Steps

- Video Recorders (NVR/VMS)

- IP Security Cameras

- Read: Choosing the Right Resolution – 1080p, 4MP, 4K, and Beyond

- Read: Analog & HD CCTV vs. IP Cameras – Which is Right for You?

- Contact our expert team to build a coverage strategy.